
The Official Graphics Card and PC gaming Thread


AfterDawn Addict

4 product reviews

19. December 2010 @ 04:29

I ran my Q9550 at 1.35V to be stable at 3.65GHz, and I know a fair few people pushing for high overclocks were using 1.4V on them too.

The only hot intel chips I remember were the old Pentium Ds, and to a lesser extent P4s. Not really seen any cooling nightmares since Core 2, though i7s put out a lot of heat when overclocked.


harvrdguy

Senior Member

22. December 2010 @ 00:00

Ok you guys - I'm entering here a bit late - at the cpu power consumption discussion.

But just prior to this, there was some amazing stuff about 30" performance on 6970.

just prior to that, however:

- - - - - - - - - -

My 8800 will soon be a piece of burnt toast:

Originally posted by sam:

If 25fps is not smooth, 28fps is not smooth, they're effectively indistinguishable. It's a complete placebo, and one that will see you killing off that 8800 very fast indeed.

Hahaha.

Well - at 25fps, the game (Dragon Rising) is slightly laggy - just a bit leaden. On the other hand, 28 fps feels smooth - the lagginess appears to be gone. Same game, same chapter - I just Alt-tabbed out, chose the 621 clock and associated 10% faster shader and 10% faster memory clocks, and alt-tabbed back in.

Are you saying that 3fps can't make that much of a difference, and I am just deluding myself? DXR stated a while back that, at the expense of 10 degrees hotter temps, his GTX board would allow a mild overclock that smoothed out certain games, when he was running at the edge of his card performance. That's what I'm talking about.

Maybe you're right, but it sure seems to go from laggy to smooth.

This is one time where I can't run from one end of the street to the other, in the L4D boathouse finale, with a timeclock, and PROVE that you run forwards just as fast as backwards, and at the same exact speed no matter what rifle you are carrying.

Remember that? LOL

Lagginess is quite subjective of course. I will say this however: On all the other games, I am running at stock 594 clock, since I am getting at least 28 to 30 fps, which seems smooth to me.

For example, I finally installed Vista, just to be able to play Medal of Honor single player, which was half-crashing on opening (a sound card issue all the trouble-shooters suggested) and it wouldn't keep track of my progress. Now MOH is working under Vista, and I got Riva working with the on screen display, but the overclocking settings are not set up. However, I saw in the test that I was getting about 30fps, so I made a mental note that I would not be needing to overclock for MOH under Vista.

By the way, speaking of Vista, should I go ahead and install Windows 7 also? Is DX11 something that will impress me on any of my five new games (MOH, Black Ops, MW2 - already finished that, BC2 single player, and Dragon Rising)?

- - - - - - - - - -

Originally posted by sam:

The origin of Price and Gaz I don't think is revealed in the story. However, their voice actors, Billy Murray and Craig Fairbrass respectively, are both from East London [Bethnal Green and Stepney], 21 miles from where I live, and about 15 miles from where Shaff lives :P

Both of them to an extent have the distinctive cockney accent that comes from that background.

Apart from voicing characters in the Modern Warfare games, both of them have appeared in a considerable amount of other media.

Interestingly, Craig Fairbrass played one of the roles in the film of the game Far Cry.

Wow, roughly twenty miles from you and Shaff!!! Haha - amazing!! And, like Shaff, I am startled to learn that there was a Far Cry movie!

- - - - - - - - -

Originally posted by sam:

There's one thing I forgot to mention, resolution.

All this applies up to 24" inclusive, and when AA is not so much applied.

Get to 30", especially with AA, and all hell breaks loose.

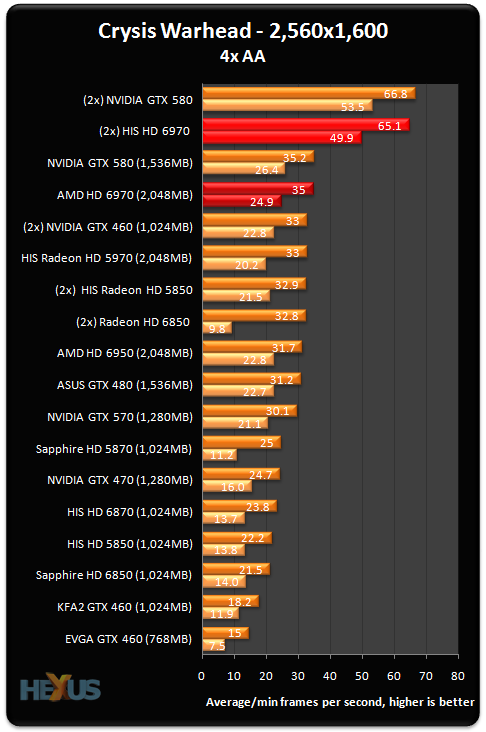

We're talking one 6970 beating dual 5970 quad crossfire configs. It's utter madness. Chart incoming.

WHAT!!!!

From "lemon - best avoided" to "utter madness." I put my wallet away, and now I've pulled it back out again. Each little guy is $370 - that's $740 for two of them, at 424 watts total. Will my Toughpower 750 handle two?

Quote:

Crysis Warhead

HD6950: 180-185 [noAA ->24"], 210 [noAA 30"], 190 [AA ->22"], 195 [AA 24"], 285 [AA 30"]

HD6970: 200 [noAA ->22"], 205 [noAA 24", AA 17"], 210 [AA 22"], 220 [AA 24"], 235 [noAA 30"], 325 [AA 30"] (Yes this is correct!)

Whaaaat???? So you're saying that the little bugger appears to have 325 power (relative to 4870 = 100) if you're running 30" with AA???

Originally posted by sam:

Get to 30", especially with AA, and all hell breaks loose.

We're talking one 6970 beating dual 5970 quad crossfire configs. It's utter madness. Chart incoming.

Holy crapola! That reminds me of that Phenom II Russian review that Russ was pushing about 2-3 years ago, where the Phenom actually beat the i7 at certain resolutions - Russ said they were tuned to that specific resolution.

So, having succumbed to the Sam influence (although Sam mostly tried to hide the awesomeness) LOL, and having picked up a 30" Dell two years ago - my ears are perking up!!!!!

Quote:

The good news, is that the results of the overstress tests [for instance, running stuff like Crysis at 4xAA maxed out] are true. So in crossfire, two 6970s can take on what no other cards really can [GTX580 SLI excluding] at 2560x1600.

LOVELY LOVELY LOVELY LOVELY! Did I mention, lovely!!!

Ok, Sam. Now let's get back to 30". You sound like you're gonna trade your dual 4870x2 cards. You're gonna drop to 35 watts total idle, from 150.

You're gonna pick up 325 x 2 x 90% = about 6 4870 cards packed into two 6970s - using these numbers because you run 30" with AA. And you can't even say it's 1.5 X better than 4870 quad cf, because quad cf doesn't scale at the 90% or better range - more like around 50%, right? So are you expecting, basically, to double your performance running Warhead, for example, compared to what you have now?

I repeat, do you think my Toughpower 750 can handle two of those 6970s?

And lastly - what do you think would have been the 6970 cf fps figures in this Warhead chart you posted, at the enthusiast setting, instead of the gaming setting?

Oh - wait - one last question. I currently have 4 gigs of DDR2 memory. I can add another 4 gigs for about $229 at Newegg. Since the 6970 graphics cards will each have 2 gigs of memory, and two of them a total of 4 gigs, I remember you said that XP 32-bit can't keep track of more than 4 gigs total - including graphics card memory. So it sounds like for sure I need a 64-bit OS - I guess Windows 7 64-bit - am I right?

So my very last questions are:

1. Do I definitely need a 64 bit OS if I plan on CF 6970s?

2. Should I also double my ram to 8 gigs, or can I leave it at 4 gigs?

Rich

This message has been edited since posting. Last time this message was edited on 22. December 2010 @ 00:06


Senior Member

4 product reviews

22. December 2010 @ 01:30

If you're planning on using more than 3.25GB of RAM, then yes, a 64-bit OS is a must.
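Where a figure like 3.25GB comes from, as a rough sketch: a 32-bit OS can address 2^32 bytes (4 GiB), and part of that range is reserved for memory-mapped devices, including a window for the graphics card. The 0.75 GiB reservation below is a hypothetical value chosen to land on the 3.25GB figure; the real amount depends on the board, chipset and cards fitted, and this assumes a 32-bit client version of Windows.

```python
# Sketch: how much RAM a 32-bit OS can actually use.
# ASSUMPTION: mmio_reserved_gib is a hypothetical figure; real systems vary.

ADDRESS_SPACE_GIB = 2**32 / 2**30  # 32-bit addresses cover 4 GiB in total


def usable_ram_gib(installed_gib: float, mmio_reserved_gib: float) -> float:
    """RAM left addressable once device windows are carved out of the 4 GiB."""
    ceiling = ADDRESS_SPACE_GIB - mmio_reserved_gib
    return min(installed_gib, ceiling)


for installed in (2, 4, 8):
    print(installed, "GiB installed ->", usable_ram_gib(installed, 0.75), "GiB usable")
```

Past the ceiling, adding RAM changes nothing on a 32-bit OS; a 64-bit OS removes the 4 GiB limit altogether.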

More RAM is never a bad thing, but I find 4 gigs to be sufficient for W7 x64. If you've got 234 bucks to blow though, don't let me stop you.

Who makes your mobo's chipset? If it's nForce, you can't run crossfire on it. I'm sure you already knew that, but you mentioned an 8800.

This message has been edited since posting. Last time this message was edited on 22. December 2010 @ 01:36

AfterDawn Addict

7 product reviews

22. December 2010 @ 01:41

I spent half that on my 8GB of RAM :p Perfect timing I guess. Though I'm probably gonna sell it soon...

To delete, or not to delete. THAT is the question!

AfterDawn Addict

4 product reviews

22. December 2010 @ 08:13

Yes. While there is a perceptible difference between 25 and 28fps, it is so slight that it would never lead anyone to say it was smooth instead of laggy. The only way that comes about is if that's what people want to think happens, and because they see a tiny detectable improvement, that's what they think to themselves they are seeing. In short, a placebo.
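To put numbers on the 25 vs 28fps argument, here is the per-frame arithmetic. It is just 1000 ms divided by the frame rate, and on its own it says nothing about whether the difference is perceptible; that is the point being argued.

```python
def frame_time_ms(fps: float) -> float:
    """Average time each frame stays on screen at a given frame rate."""
    return 1000.0 / fps


slow, fast = frame_time_ms(25), frame_time_ms(28)
print(f"25fps: {slow:.1f} ms per frame")
print(f"28fps: {fast:.1f} ms per frame")
print(f"difference: {slow - fast:.1f} ms ({(28 / 25 - 1) * 100:.0f}% more frames)")
```

So the overclock buys about 4 ms per frame, roughly 12% more frames.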

DX11 makes a bit of a difference, but only really because it incorporates DX10. Graphically-speaking DX11 does almost nothing yet. However, several games that use DX11 do not distinguish DX10. What this means is, if you have DX11 installed, you can play in DX11. If you have DX10 installed, you can only play in DX9, as there is no intermediate mode. And DX10 vs DX9 does make a difference.

Obviously, you can only get DX11 with the GTX400 series or HD5000 series and newer, the 8800GTX certainly isn't capable of it. My 4870X2s aren't even capable of it.

The 'lemon' concept is a question of price. Compared to the GTX580, the HD6970 is still a bit of a lemon. It doesn't really have as much of a power advantage as Radeons have had previously, and it's still definitely inferior to the 580, performance-wise.

However, fact is, the 6970 is in stock, and $370. Compare that to the GTX580 at $510 and still in minimal stock. Things look a bit brighter when you consider this.

Overall the 6970 is indeed faster than the GTX570, by about 10%. Good news, as it's only $20 (6%) more expensive, and uses slightly less power (210W vs 230-270W).

However, on their own, the high-end Radeons are nothing to shout about. Nothing to shout about at all. The reason for this is just how capable crossfire configurations are across the board with the HD6 generation.

Now, the HD6800s with 1GB of video memory and smaller GPUs, can't cope with high levels of AA at 2560x1600. They are limited to 4x and sometimes 2x MSAA in games due to this. However, at smaller resolutions like 1920x1200 this obviously doesn't apply. And when memory restrictions don't apply, HD6800 crossfire configs simply pancake everything else there is out there.

Geforce GTX570: $350, 252W(ave), Performance Index 194

HD6850 Crossfire: $360, 254W, Performance Index 292

Radeon HD6970: $370, 212W, Performance Index 212

HD6870 Crossfire: $480, 302W, Performance Index 332

Geforce GTX580: $510, 290W, Performance Index 230

HD6950 Crossfire: $600, 340W, Performance Index 374

GTX570 SLI: $700, 504W (ave), Performance Index 350

HD6970 Crossfire: $740, 424W, Performance Index 412
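The list above can be turned into value figures by dividing the performance index by price and by power draw. This sketch uses only the prices, wattages and indices quoted in the post; 'index per $100' and 'index per 100W' are just one illustrative way to rank them, not a standard metric.

```python
# (config, price in USD, power in W, performance index), as quoted in the post
cards = [
    ("GTX570", 350, 252, 194),
    ("HD6850 CF", 360, 254, 292),
    ("HD6970", 370, 212, 212),
    ("HD6870 CF", 480, 302, 332),
    ("GTX580", 510, 290, 230),
    ("HD6950 CF", 600, 340, 374),
    ("GTX570 SLI", 700, 504, 350),
    ("HD6970 CF", 740, 424, 412),
]


def efficiency(cards):
    """Return (config, index per $100, index per 100W), best value first."""
    rows = [(name, 100 * perf / price, 100 * perf / watts)
            for name, price, watts, perf in cards]
    return sorted(rows, key=lambda row: row[1], reverse=True)


for name, per_dollar, per_watt in efficiency(cards):
    print(f"{name:10} {per_dollar:5.1f} per $100  {per_watt:5.1f} per 100W")
```

On these numbers the HD6850 pair tops both columns, and the GTX580 comes last on performance per dollar.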

There's simply no comparison with the crossfire configs, at all.

The performance of the HD6900s doesn't really show up until high resolutions, however, where they are basically unfazed by maximum detail and huge levels of AA.

It's worth bearing in mind the huge 325 figure only applies to Crysis Warhead and not most other games. However, this does highlight the fact that as games get more demanding, the HD6970 will cope better than anything else out there.

There's no such thing as a particular resolution for CPUs as CPUs don't render graphics at all. The only possible fact I could link that to is that below a certain resolution, the graphics cards are bottlenecked by the CPU, as they're producing such a high framerate. Presumably the 'specific resolution' refers to the point at which this bottleneck is no longer an issue, i.e. the graphical stress is so high the CPU is not the limiting factor. Considering this is different for every graphics/CPU pairing, there is no 'tuned to a specific resolution'. Not for games at least. Video rendering is obviously a completely different story.

You can't really use the '325x2x90%' to work out how the cards run overall, merely how they run in Crysis. And as you see from that graph, it's actually x100%.

In Warhead, the four 4870s scale roughly 200-220% from what I can gather, so I'm looking at going from 300-320 to 650. That's for Warhead specifically; other games won't see such an increase. So yeah, I'll be doubling my frame rate in Warhead.
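Rich's '325 x 2 x 90%' estimate and the x100% correction are the same multiplication with a different efficiency factor. A small sketch using only the figures quoted in the thread; as noted above, it applies to Warhead at 30" with AA, not to games in general.

```python
def crossfire_index(single_index: float, gpus: int, efficiency: float) -> float:
    """Scale a single-card index up to a multi-card setup.

    efficiency=1.0 means every card adds its full single-card score.
    """
    return single_index * gpus * efficiency


rich_estimate = crossfire_index(325, 2, 0.9)  # Rich's 90% assumption
cf6970 = crossfire_index(325, 2, 1.0)         # scaling seen in the Warhead chart

for current in (300, 320):  # estimated index of the current quad 4870 setup
    print(f"{current} -> {cf6970:.0f}: x{cf6970 / current:.2f}")
```

Either way it works out to roughly double the current setup in this one game.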

As for enthusiast, this is a bit of guesswork, but I would hazard a guess at (Minimums)

HD5970: 2fps

HD6970: 14fps

CF6970: 28fps

SLI580: 32fps

Running Warhead in 32-bit Windows is impossible. The game needs so much memory that you could have all the graphics power in the world and the game would still run horrendously. 8GB would be the bare minimum for Warhead, ideally 16GB, and obviously, 64-bit Windows.

You will want an SSD with your page file and preferably also the game install on it, as the game will be reading/writing tens of gigabytes of data whilst you are playing it.

64-bit windows is effectively mandatory for one 1GB graphics card, let alone two 2GB cards.

4GB memory you can 'get away with', but I will put it out there, that even if I reduce the detail level and turn off AA so my 4870X2s can cope with the game, it is unplayable on my system because I only have 4GB of RAM.

DXR is also right, I can't remember which board you have. If it's an nforce board, you can't use crossfire and will need a new board.

For the record, the RAM I paid £101 for in February is now £44. Sucks eh? :P


AfterDawn Addict

7 product reviews

22. December 2010 @ 12:47

Wow, Warhead is a Ram hoard eh? And I thought GTA IV was a hoard LOL!


AfterDawn Addict

22. December 2010 @ 13:02

MGR (Micro Gaming Rig) .|. Intel Q6600 @ 3.45GHz .|. Asus P35 P5K-E/WiFi .|. 4GB 1066MHz Geil Black Dragon RAM .|. Samsung F60 SSD .|. Corsair H50-1 Cooler .|. Sapphire 4870 512MB .|. Lian Li PC-A70B .|. Be Quiet P7 Dark Power Pro 850W PSU .|. 24" 1920x1200 DGM (MVA Panel) .|. 24" 1920x1080 Dell (TN Panel) .|.

AfterDawn Addict

4 product reviews

22. December 2010 @ 16:55

Omega: Yeah GTA4 is a ridiculous memory hoard, but it doesn't leak memory like Crysis and Warhead do, so if you're playing it in a large map for a prolonged period, GTA4 will be using less memory. I'd still advocate at least 8GB to enjoy GTA4 properly, ideally 12, but unlike Crysis, 16GB would probably be slight overkill. Given how memory prices have sunk recently I intend to up to 12GB when I get the HD6970s.

Shaff: Placebos vary with perspective though, and I'm sure Rich's perspective would change if his 8800 prematurely expired before he could afford to replace it. If it was a case of 'the upgrade is in the post', or if there was no downside to overclocking a card, then sure, go nuts, but the fact is, the risk of failure is high enough as it is with an 8800, let alone when you're overclocking it and it's already past its use-by date.

It does amuse me how you manage to find the most negative benchmarks from the set.

A lot of sites vary wildly with test results from others using the same game, and when this occurs I look to the sites that have the best testing methodology. The benchmarks from both HardOCP (but not exclusively as they test at 2560x1600) and thetechreport detail that the HD6970 is below the GTX580, but above the GTX570.

I calculated the averages from two benchmark suites, and it comes out as

HD5870 180

GTX480 190

HD6950 191

GTX570 194

HD6970 212

GTX580 230

So on that basis, I treat the HD6970 10% above the GTX570.
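The relative gaps come straight from the averaged indices above. A quick sketch; the post rounds the HD6970's lead to 10%, and the same calculation gives the GTX580's lead over the HD6970.

```python
# Averaged performance indices from the two benchmark suites above
index = {"HD5870": 180, "GTX480": 190, "HD6950": 191,
         "GTX570": 194, "HD6970": 212, "GTX580": 230}


def lead_pct(a: str, b: str) -> float:
    """How much faster card a is than card b, in percent, by average index."""
    return (index[a] / index[b] - 1) * 100


print(f"HD6970 vs GTX570: {lead_pct('HD6970', 'GTX570'):+.1f}%")
print(f"GTX580 vs HD6970: {lead_pct('GTX580', 'HD6970'):+.1f}%")
```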

Taking the hardwarecanucks tests you posted:

AvP: HD6950 wins against GTX570 (++)

BBC2 (Known to be biased down against the HD6 series): HD6970 marginally behind GTX570 (-)

DiRT2 (Known to be biased down against the HD6 series): HD6970 approximately equal to GTX570 [ignoring tests with no AA, as with cards this powerful there is no reason not to use it]

F1 2010: HD6970 negligibly ahead of GTX570 [contradictory to several other tests of this game]

Just Cause 2: HD6970 considerably ahead of GTX570 when AA is applied, and ahead even with it disabled (+)

Lost Planet 2 (Considerably nvidia biased): HD6970 considerably behind GTX570 (--)

Metro 2033 (known to be slightly biased): HD6950 equal to GTX570 (+)

Sum the pluses and minuses and I come out with 4 pluses versus 3 minuses, so on balance the HD6970 is indeed superior to the GTX570, if only slightly, but given the inclusion of nvidia-biased games, that's not strictly an accurate test.
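The plus/minus tally can be reproduced mechanically. The marks are copied from the list above, with the two games that got no mark (DiRT2, F1 2010) counted as ties.

```python
# Marks per game, from the Radeon's point of view ('' = roughly equal)
results = {
    "AvP": "++", "BBC2": "-", "DiRT2": "", "F1 2010": "",
    "Just Cause 2": "+", "Lost Planet 2": "--", "Metro 2033": "+",
}


def tally(results):
    """Count all '+' and '-' marks across the games."""
    pluses = sum(mark.count("+") for mark in results.values())
    minuses = sum(mark.count("-") for mark in results.values())
    return pluses, minuses


print(tally(results))  # (4, 3)
```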

The positioning of the cards only re-affirms my earlier statement though, if you actually read it, which is that buying a single HD6900 card is the wrong way to do it. You either want one HD6800 for low-end, two HD6800s for high-end and two HD6900s for top end. It's crossfire that brings the big advantages of this generation due to its epic scaling, and it's crossfire that gives the HD6900s the GPU power to support their enormous potential.

Hexus aren't included because they quote a lot of false facts in their reviews, such as the HD6970 being £40 more than the GTX570. The HD6970 is actually cheaper than the 570, quite significantly so, in fact.

This message has been edited since posting. Last time this message was edited on 22. December 2010 @ 16:56


AfterDawn Addict

23. December 2010 @ 00:21

Again with this bias stuff. Really, who sets the standard on which game is biased?

http://techreport.com/articles.x/20126/16

Tech Report: overall, 570 = 6970.

So what if a title is "biased"? Overall we can see the cards are the same.

Also, negative? They really aren't negative; all they show is what they report on. Just because it's not pro-AMD doesn't make it negative.

The best GPU releases this year IMO have all been Nvidia (GTX 5 series and 460), and with the GTX 560 about to hit, it should continue.

With the 5 series, wasn't Metro highly biased? What's changed is the tessellator. Metro utilises it, and the 5 series had, IIRC, one unit.

Fermi, on the other hand, is based around it. You call it biased, I call it hardware fail.

With Battlefield, every site I have seen seems to show they butt heads, so how is that biased either way?

While I agree that dual cards are better for FPS per price, there are still problems with new games, noise, power consumption and future upgrades.

While I do say the GTX5 cards are better than the HD6, that's in comparison to their previous series. Compared to each other, most are exactly on par: no difference, just get whichever ones you prefer. But overall both are flops, and TSMC are to blame.

Really, I think we shouldn't be arguing green vs red, but arguing about who is going to fill that 3rd spot to push these two.


harvrdguy

Senior Member

23. December 2010 @ 01:14

Well, good news for me - I don't have an nvidia board - it's an Asus P5E with the X38 chipset, and I've got 16 lanes of PCI-e for each of two graphics cards in crossfire.

It sounds like Shaff and DXR are both behind the concept that a few fps can smooth out a laggy game, and improve playability significantly. We all know that 24.7 fps fools the eye (or some such figure close to that) regarding motion pictures.

Playing Dragon Rising, I just happened one day to notice that I had dropped down to the low 20s - it was the mission where you go down and blow up the pump at the refinery. I think the extra demand was because the huge oil tanks were in view - so more going on in the screen than usual. I dropped from 4x AA down to 2x AA, and managed only to bring the fps up to 25 - at the bare threshold for fluid motion, speaking of movies. The lagginess was in how my mouse responded - a tiny but perceptible lag.

I thought back to the experimenting I had done with overclocking, and selected the first overclock step from the 594, which was the 621 - it's the next core clock up. Not much of a step, but the shader and memory clocks were about +100 from stock, about a 10% gain.

This improved my fps to 28 - and the slight mouse lagginess disappeared. I won't argue that I do seem to have quite a tolerance for lag - seeing how I played quite a bit of Grand Theft Auto IV at around 12 fps - killing off all the bad guys on the police wanted list, etc., and completing many missions.

Anyway, I suppose we've beaten that subject to death. You're right of course, if I kill off my 8800GTX before I am able to pop for at least the first 6970, then that will chill my game playing for a bit. But the weather is quite chilly, and I added another little 80mm exhaust over the hole I created removing the 3 slot covers below the 8800 card, and I put the extra kaze in the case blowing on the 8800, instead of the 1600 rpm scythe - it makes quite a racket and also a vibration, but the case closes up and I can't hear any of that through the headphones. So the 8800GTX runs below 90 degrees. I know that the overclocking is supposed to be quite damaging, even at low operating temps, but I'll take DXR's experience with the GTX series, as he had good luck overclocking for quite a while.

------------------

Now getting back to the twin 6970s - I am quite excited - obviously because I am in the 30" camp. So selfishly I don't really care that the 6970 doesn't really shine for 24" play - I pretty much only care that it becomes a BEAST for AA and 2560x1600 play. And let me ask you - WHY EXACTLY does it do that? (And you mentioned same is true also of nvidia 570 and 580.)

I appreciate your advice about memory, suggesting 12 gigs. Unfortunately the max memory I can utilize on this motherboard is 8 gigs, and I can get the 8gigs DDR2 for about $229 (gskill) on newegg.

I also appreciate the Warhead advice about the SSD for page file, and maybe for the entire game - good idea.

So I was correct - you are going to double your graphics power at the 650 number, realizing 100% scaling - awesome!! Assuming I can follow suit with two of those cards myself, 8 gigs of memory, and 64-bit Windows 7, I am going to assume that my present PSU - your old Toughpower 750 - will handle the load with my Q9450 CPU. Am I correct?

How important will it be to overclock the Q9450 up to 3.2 or beyond from the present 2.66, for good frame rates on warhead, with the 2x6970 configuration and 8 gigs of ram, and will my toughpower psu still handle the load including the cpu overclocking? I have two disk drives, and by then, depending on price, I'll have the SSD also, but I assume, maybe incorrectly, that the power draw of an SSD is marginal.

Also, you guesstimated 28 fps with 6970 CF on Warhead at enthusiast settings. Are you planning to try to play the game at enthusiast settings with your i5 and see how it plays - at least in the early chapters before the ice?

Rich


Senior Member

4 product reviews

23. December 2010 @ 04:58

Overclocking an Nvidia graphics card is like lighting a fire inside a room made of dried wicker vines. Is it a good idea? Hell no. Is it fun to see how long your luck runs? Hell yes.

The only reason I OCed the GTX460 is because it seems to run a hell of a lot cooler than the 8800 series ever did, not to mention it was already factory overclocked.


AfterDawn Addict

4 product reviews

23. December 2010 @ 07:10

Shaff: A game that is biased is one that does not follow a pre-established trend of performance. We have an accepted baseline for older cards to use as reference, and if a game shows that, for example, the GTX260-216 and HD4870 which we know are equals, to be mismatched, i.e. one faster than the other, then the game is biased. The same goes for a few other pairings as well.

There are some AMD-biased titles out there as well, not many but they do exist, and that's also considered in testing.

You can't simply write off bias and consider biased games as 'that's just another one of the tests', as where do you stop? TWIMBTP titles with 20% bias? Lost Planet 2 with 40% bias? HAWX 2 with 115% bias?

Including such titles in an average score completely changes the outcome of a benchmark suite.

From techreport:

"Holy moly, we have a tie. The GTX 570 and 6970 are evenly matched overall in terms of raw performance. With the results this close, we should acknowledge that the addition or subtraction of a single game could sway the results in either direction"

While they have at least excluded HAWX2, Lost Planet 2 is included in that test. Its removal places the HD6970 where it actually performs, a few percent above the GTX570.

Meanwhile, the HD6950 performs a few percent below it.

The best GPU releases this year have not all been nvidia. The GTX460 was well placed at the time, but nothing exemplary, as it was no faster than the HD5830, no more efficient, and not really any cheaper. To this day a 1GB GTX460 costs more than an HD5830 did towards the end of its life.

Then the HD6850 came out, which is agreed by the majority of unbiased people to have been the best card of the year. It's the same price as a 1GB GTX460, considerably faster, and considerably more efficient, at 127W vs 170W.

Rating the GTX5 cards as 'better' is laughable.

You could potentially argue that the GTX580 was worth buying before the HD6900 came out, but now it's a complete waste.

9% extra performance, for 80W more power, $150 more cost, and being nearly impossible to find? Are you mad?

Same goes for the GTX570, 60W more power for no extra performance at all over the HD6970. It being a 1280MB card doesn't really help either.

As I keep saying though [and you keep ignoring], I don't think any of these cards are worth buying solo. What you really want is two HD6850s, as they far outstrip anything else in their price, power and power-connector sector. Better scaling and indeed reliability from this gen means that it really is the best option.

Two HD6850s are the same price as a GTX570, sometimes cheaper, typically a massive 50% faster, are 6+6 not 6+8, and only use 254W max, versus the GTX570 which can go up to 270W. There's simply no contest. Find me a major game that isn't HAWX 2 where a GTX570 beats two 6850s. Go find one.

You will notice that while Metro 2033 was biased initially, the more complex HD6900s have been able to negate the bias and actually perform beyond the level of the equivalent geforces.

Battlefield is one title that takes an unusual turn, as unlike the other games it's not biased from the outset through coding, the drivers for the game are simply bad AMD-side, and in reality, they always have been. However, again, see earlier comment about 6850s, they're 20% on top of the 580, let alone the 570.

Even AvP which was definitely nvidia-biased at one stage sees the HD6970 perform a distinctive percentage above the GTX570, and close behind the 580.

September 2009 [HD5 launch] - April 2010 [Fermi Launch]:

£200: HD5850

£300: HD5870

£450: CF5850

April 2010 - July 2010 [GTX460 launch]:

£200: HD5850

£300: HD5870

£450: CF5850

£800: SLI480 [not really worth it!]

No change mainly, because the GTX465, GTX470 and GTX480 were all terrible.

July 2010 - October 2010 [HD6800 launch]:

£150: GTX460

£200: HD5850

£300: HD5870/SLI460 -> SLI460s were pretty good here, IF you ran low resolutions. 768MB per GPU was a no-go for 30" res.

£400: CF5850

October 2010 - November 2010 [GTX580 launch]:

£150: HD6850

£200: HD6870

£300: CF6850

£400 for CF6870 isn't really worth it, so I held people off here in anticipation of the HD6900 launch.

November 2010 - December 2010 [GTX570 and HD6900 launch]:

£120: GTX460

£150: HD6850

£190: HD6870

£300: CF6850

£450: GTX580 [not really worth it]

£900: SLI580

December 2010 - January 2011 [GTX560 launch]:

£140: HD6850

£220: HD6950

£280: CF6850

£440: CF6950

£920: SLI580 [again, not worth it]

Barring the GTX460 for low-end, there's no occasion where using a geforce has been worth it in the high-end performance sector at all. The cards are too underpowered for their ridiculous price tags and power consumption. To say that they're better isn't a matter of opinion, it's simply false.

You can argue what you like from a technological perspective, but until there's a midrange card as good as the HD6850 from nvidia that scales as well, and until the GTX580 is readily available and loses at least £100 from its price tag, Radeons are the only products to buy. I'm sorry but that's simply how it is.

Rich: Not actually true on the 24.7fps front, primarily because your monitor isn't producing 24.7fps, it's producing 60fps. It's just that, because your PC isn't putting out that many frames, some of those 60 refreshes show the same frame. Your PC might happen to finish rendering a frame just after the monitor has refreshed, so the previous frame is shown again and what you're actually seeing is a frame rate that's lower still. The human eye does notice this, and it's why I can distinguish lag all the way up to 60fps.
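The uneven display described here can be shown with a simplified sketch. It assumes frames finish at perfectly even intervals and that each monitor refresh shows the newest finished frame (no tearing, no render queue); real drivers and vsync settings complicate this.

```python
from collections import Counter


def refreshes_per_frame(fps: int, refresh_hz: int, seconds: int = 1) -> list[int]:
    """Count how many monitor refreshes show each rendered frame.

    ASSUMPTIONS: perfectly even frame delivery, and every refresh shows
    the most recently completed frame.
    """
    counts = Counter()
    for k in range(refresh_hz * seconds):
        # Refresh k happens at k/refresh_hz seconds; latest finished frame:
        counts[(k * fps) // refresh_hz] += 1
    return [counts[i] for i in range(fps * seconds)]


pattern = refreshes_per_frame(25, 60)
print(pattern[:5])  # [3, 2, 3, 2, 2]
print([round(n * 1000 / 60, 1) for n in pattern[:5]])  # on-screen time in ms
```

Even at a rock-steady 25fps, frames alternate between about 50 ms and 33 ms on screen.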

This also doesn't cover microstutter, which can happen in dual graphics scenarios sometimes [thankfully less often with modern cards]. Frame rate can jump wildly between frames, literally each odd frame taking twice as long to render as even frames, for example. This doesn't get picked up by FPS counters, but it is noticeable in the real world. With CF (and SLI) it's conceivable to notice a game lagging at as high as 100fps.

In Left 4 Dead 2, for example, the only thing that typically microstutters is the fire effects. Stare up close at a molotov going off: the fps counter says 80, but it feels more like 40. It's perceptibly not smooth. It's hardly appalling, but it's noticeable nonetheless.
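To see why an FPS counter misses microstutter, compare two frame-time sequences with the same average. The 5 ms / 20 ms alternation is hypothetical, chosen so the counter reads 80fps as in the molotov example; 'worst gap fps' is just an illustrative metric, not what any particular overlay tool reports.

```python
def fps_counter(frame_times_ms):
    """What a typical FPS counter reports: frames divided by elapsed time."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000)


def worst_gap_fps(frame_times_ms):
    """Frame rate implied by the longest gap between frames (illustrative)."""
    return 1000 / max(frame_times_ms)


even = [12.5] * 100           # steady frame delivery
stutter = [5.0, 20.0] * 50    # hypothetical AFR microstutter, same average

for name, times in (("even", even), ("stutter", stutter)):
    print(f"{name}: counter {fps_counter(times):.0f}fps, "
          f"worst gap {worst_gap_fps(times):.0f}fps")
```

Both read 80fps on the counter, but the stuttering sequence never delivers frames faster than every 20 ms.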

It stands to reason that you don't want microstutter if you're getting a low frame rate too.

Rich: turning AA down from 4x to 2x doesn't reduce required performance much, but it does reduce video memory a fair bit. The 8800 probably isn't out of video memory at 4x, just not powerful enough to render the effect, so that will be why you don't see much of a benefit in FPS.

Dragon Rising, along with a few other titles, uses a frame-delayed cursor. This means, when you send a command, the game queues it, for example, 3 frames behind the current action, so 3 frames have to be rendered before the screen pans round to where you moved. At 100fps, this is 30ms, a largely imperceptible delay. At 20fps, this is 150ms, which is quite a substantial period of time, slower than the fire rate of most of the automatic weapons in the game.

Games that use this annoy me, as you have to be putting out a very high FPS to avoid this delay, even if the game seems smooth without one.
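The frame-delayed cursor works out to frames queued multiplied by frame time. The 3-frame queue is the illustrative figure used above, not a measured value for Dragon Rising; 25 and 28fps are included because those are the numbers from Rich's mouse-lag observation.

```python
def queued_input_delay_ms(frames_queued: int, fps: float) -> float:
    """Delay before a mouse movement appears, if the game renders it
    frames_queued frames behind the current action."""
    return frames_queued * 1000 / fps


for fps in (100, 28, 25, 20):
    print(f"{fps:>3}fps: {queued_input_delay_ms(3, fps):.0f} ms")
```

Going from 25 to 28fps cuts this delay from 120 ms to about 107 ms.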

You're right that overclocking does damage at lower temperatures than standard. 'Lower than 90 degrees' in the context of a Radeon means 'you can probably run the fan a bit quieter than that'. 'Lower than 90 degrees' in the context of a stock GeForce is 'the absolute maximum'. 'Lower than 90 degrees' in the context of an overclocked GeForce is 'that's not low enough'.

The HD6970 takes on 2560x1600 so well for the same reason the GTX400s take on 2560x1600 so well. Apart from having 2GB of video memory so it never runs out, the architecture is BIG. Actual maximum output isn't so high because the clock speeds of the GPU aren't enormous and neither is the number of cores, but the actual cores themselves are very complex, and designed in such a manner that they're unfazed by huge workloads.

Buying 8GB for $230 is a bit barmy, as you could buy a new board for a Core i5 or i7 AND 8GB of RAM for that much.

With the Q9450 stock the 750W Toughpower should be OK, but it will be being loaded quite heavily. With the CPU overclocked [you will want that for Warhead and crossfire, I assure you], you're probably pushing things.

You'll probably be pulling around 700-720W AC, so 600-620W DC out of the unit. That's not the maximum, but remember this is still a Thermaltake PSU, even if it does have CWT internals, and I'm not sure I like the prospect of loading one so heavily, especially in a hotbox environment. [A hotbox is a case in which the PSU sits at the top, sucking in heat from the CPU area. The far better way of running a PSU is at the bottom of the case facing downwards, pulling floor air through a vent and isolated from the other components.]
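The gap between the AC figure at the wall and the DC figure out of the unit is the PSU's efficiency loss. A rough Python sketch, assuming about 86% efficiency (an assumed value, roughly what 600-620W DC from 700-720W AC implies, not a measured Toughpower figure):

```python
PSU_RATING_W = 750  # Toughpower 750W, from the post


def dc_output_w(ac_draw_w: float, efficiency: float) -> float:
    """DC power delivered to the components for a given wall (AC) draw."""
    return ac_draw_w * efficiency


def load_fraction(dc_w: float, rating_w: float = PSU_RATING_W) -> float:
    """How much of the PSU's rated DC output is in use (1.0 = 100%)."""
    return dc_w / rating_w


if __name__ == "__main__":
    efficiency = 0.86  # assumed; roughly what 600-620W DC from 700-720W AC implies
    for ac in (700, 720):
        dc = dc_output_w(ac, efficiency)
        print(f"{ac}W AC -> {dc:.0f}W DC, {load_fraction(dc):.0%} of rating")
```

Under that assumption the unit sits at around 80-83% of its rating, which is "not max" but a heavy continuous load for a PSU breathing hot air.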

I'll no doubt try running Warhead with the 6970s, but because I only have 4GB of RAM I haven't even been able to get the game to load a level at Enthusiast settings: so little of the game [2.8GB of 12.5GB] fits in RAM that it can't store enough to render a single frame.

DXR88: Depends which 8800. The coolers on the pre-overclocked GTX460s can be extremely beefy [they're the same coolers used on HD5870s and sometimes even GTX470s], which is why they can take so much load.

GTX460s overclocked to the limit, around 940MHz, put out over 250 watts, a dramatic increase from their typical 170W at stock, and as much as two HD6850s put together!

AfterDawn Addict

15 product reviews

23. December 2010 @ 07:20

For the record the HD6850 is one of the most significant cards released since the 8800GT. Definitely enjoying the scaling. One of very few deals where it was actually worth it in the end to get a sidegrade.

I think I pinched my pennies close enough to make the cost pocket change. Guy who bought my 5850s says he's keeping them as a "mating pair" lol XD. Gotta admit I felt bad about breaking my 4870s up because they were truly a pair and had never been run separately. Shoot me but computers have a certain soul to them you really learn to feel out after a while. Crossfired video cards are something you don't readily split up.

Really is a funny cycle though. I basically have to go Crossfire to get a worthwhile upgrade now. 4870s, 5850s, and now 6850s, it really is addictive :D Also, 4850s would have been cool.

AMD Phenom II X6 1100T 4GHz(20 x 200) 1.5v 3000NB 2000HT, Corsair Hydro H110 w/ 4 x 140mm 1500RPM fans Push/Pull, Gigabyte GA-990FXA-UD5, 8GB(2 x 4GB) G.Skill RipJaws DDR3-1600 @ 1600MHz CL9 1.55v, Gigabyte GTX760 OC 4GB(1170/1700), Corsair 750HX

Detailed PC Specs: http://my.afterdawn.com/estuansis/blog_entry.cfm/11388

This message has been edited since posting. Last time this message was edited on 23. December 2010 @ 07:23

AfterDawn Addict

4 product reviews

23. December 2010 @ 07:24

Once you move to crossfire, it's rarely an upgrade to buy a single card. Performance just doesn't increase that much in one, or even two generations. It's taken until the GTX580/HD6970 to actually provide an improvement over one 4870X2, albeit by a measly 28%/18%.

AfterDawn Addict

15 product reviews

23. December 2010 @ 07:34

Which is why I love my video cards. Overkill for your resolution is the only way to go if you ask me. Crysis maxed? I say lol let's do it :P I don't even use my cheap settings anymore. Just crank it and enter all the little performance tweaks: turn off edge AA, move physics to the 4th core, slightly higher LOD for distant textures, etc. Just to smooth it all out.

And don't even get on me about it because Crysis is an awe-inspiring showcase of technology.

This message has been edited since posting. Last time this message was edited on 23. December 2010 @ 07:39

AfterDawn Addict

4 product reviews

23. December 2010 @ 07:39

Not really such a thing as overkill for 2560x1600 yet, unless you count the resolution itself :P Could use a UD9 board and go with three GTX580s or four HD6970s, but for £200 more for the board and £560 or £820 more for the cards, it's not really worth it for the extra 50% performance! Even so, I don't think that's really overkill for the resolution. Crysis might be almost smooth maxed out, not far off at least, but other games, probably not so much. Certainly not Arma 2, Cryostasis, Lost Planet 2 or whatever the other one was, I forget :P

AfterDawn Addict

15 product reviews

23. December 2010 @ 07:46

Actually Lost Planet 2 is quite reasonable on my setup, as is Metro 2033, which is maxed with advanced DoF turned off and AAA, scoring about 60FPS average, which is near 100% scaling.

Cryostasis is a fluke and Arma2 is terribly coded, though ridiculously good looking when cranked. My point being, the few games I can't run are mostly ones I'm not interested in, so it's of little consequence to me. As far as my current performance needs go, it's overkill for everything except BC2 silly-maxed with 4xAA, which it runs admirably at 50-70fps, sometimes much higher. That's basically what I consider the sweet spot, given my large CPU bottleneck in a lot of the game. I think it's about 60-80% scaling depending on the area.

AfterDawn Addict

23. December 2010 @ 08:18

Oi vey. Whyy Sam whyy? Such a LONG arse post. Will get back to it later. Or in fact it's so long I will just not reply right now. Must you extend it so much? Haha.

MGR (Micro Gaming Rig) .|. Intel Q6600 @ 3.45GHz .|. Asus P35 P5K-E/WiFi .|. 4GB 1066MHz Geil Black Dragon RAM .|. Samsung F60 SSD .|. Corsair H50-1 Cooler .|. Sapphire 4870 512MB .|. Lian Li PC-A70B .|. Be Quiet P7 Dark Power Pro 850W PSU .|. 24" 1920x1200 DGM (MVA Panel) .|. 24" 1920x1080 Dell (TN Panel)

AfterDawn Addict

4 product reviews

23. December 2010 @ 08:20

Jeff: Actually the scaling is 100% in Bad Company 2. The problem is that the single GPUs underperform, for reasons I'm not entirely sure of. The 100% crossfire scaling is what keeps the HD6s competitive with the Geforces.

Shaff: You wrote a lot I disagree with, so I wrote a lot back :P

harvrdguy

Senior Member

23. December 2010 @ 15:51

Originally posted by sam:

You're right that overclocking does damage at lower temperatures than standard. 'Lower than 90 degrees' in the context of a Radeon is 'you can probably run the fan a bit quieter than that'. 'Lower than 90 degrees' in the context of a stock GeForce is 'the absolute maximum'. 'Lower than 90 degrees' in the context of an overclocked GeForce is 'that's not low enough'.

Okay, I hear you. To be more specific about my setup: with the 120mm 3000rpm Kaze blowing sideways at the board, and the new 80mm fan pulling air through the openings of the 3 removed slot covers, the slightly overclocked GeForce seems to hang right at 84 degrees. That should stay true, if the board holds out, for at least the next few months until hot weather returns. But I only overclock it for Dragon Rising, and I'm sure I'll have the game finished one of these days. (I hear a sequel is coming in the summer.)

Originally posted by sam:

Dragon Rising, along with a few other titles, uses a frame-delayed cursor. This means, when you send a command, the game queues it, for example, 3 frames behind the current action, so 3 frames have to be rendered before the screen pans round to where you moved.

Fascinating! May I ask, Sam, out of curiosity, how you are able to come across this kind of detailed inside information about the workings of a game?

Originally posted by sam:

Rich: Not actually true on the 24.7fps front, primarily because your monitor isn't producing 24.7fps, it's producing 60fps. It's just that, because your PC isn't putting out that many, some of those 60 frames are repeats. Your PC might happen to finish rendering a frame just after the monitor has sent one that's the same as the one before, so what you're actually seeing is a frame rate that's lower still. The human eye does notice this, and it's why I can distinguish lag all the way up to 60fps.
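To see why 24.7fps on a 60Hz screen isn't the same as a film's steady 24fps, here's a small Python sketch that counts how many refreshes each rendered frame stays on screen. It's a simplified model under assumptions: frames finish at perfectly even intervals, the render rate is below the refresh rate, and each refresh shows the newest finished frame (vsync-style, no tearing).

```python
import math
from collections import Counter
from fractions import Fraction


def hold_pattern(render_fps, refresh_hz, refreshes):
    """How many monitor refreshes each rendered frame stays on screen.

    Simplified model (an assumption): frames finish at perfectly even
    intervals, render_fps is below refresh_hz, and each refresh shows the
    newest finished frame. Rates are parsed via str() into exact fractions
    so values like 24.7 don't suffer float rounding.
    """
    fps = Fraction(str(render_fps))
    hz = Fraction(str(refresh_hz))
    # index of the newest completed frame at each refresh tick
    shown = [math.floor(k * fps / hz) for k in range(refreshes)]
    holds, run = [], 1
    for prev, cur in zip(shown, shown[1:]):
        if cur == prev:
            run += 1
        else:
            holds.append(run)
            run = 1
    return holds  # the last, possibly unfinished run is left out


if __name__ == "__main__":
    refresh = 60
    pattern = hold_pattern(24.7, refresh, 600)
    for count, n in sorted(Counter(pattern).items()):
        print(f"{n} frames held for {count} refreshes ({count * 1000 / refresh:.1f} ms each)")
```

At 24.7fps the frames alternate irregularly between 2 refreshes (33.3ms) and 3 refreshes (50ms) on screen, whereas at 30fps every frame gets exactly 2. That uneven pacing is the judder described above, on top of the low rate itself.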

Ah hah! I see what you mean. Quite different from a movie.

Then I suppose it all just comes down to my physiology - my reaction time, my visual sensitivity, etc. I guess that what seems "smooth" to me would be laggy for you. Now I understand more clearly why you like scenarios with frame rates in the 50s and 60s - I had thought it was only to have a buffer for when the action really heats up and the frames drop.

Originally posted by sam:

The HD6970 takes on 2560x1600 so well for the same reason the GTX400s take on 2560x1600 so well. Apart from having 2GB of video memory so it never runs out, the architecture is BIG. Actual maximum output isn't so high because the clock speeds of the GPU aren't enormous and neither is the number of cores, but the cores themselves are very complex, and designed in such a manner that they're unfazed by huge workloads.

WOW! So then, isn't that what Fermi was supposed to do a couple years ago for the Nvidia guys, run AA without breaking a sweat? Are we saying, then, that ATI has now figured out how to duplicate the advantages of Fermi?

Quote:

Buying 8GB for $230 is a bit barmy, as you could buy a new board for a Core i5 or i7 AND 8GB of RAM for that much.

Hmmmm. You know, you're right. If Warhead won't even load on Enthusiast on your machine because of the 4GB, then 8GB is the minimum and 12 to 16GB even better. On top of that, my Q9450 will probably become a bottleneck. Maybe I should continue to let Warhead sit in the closet until I have a couple thousand for all-new components in the Spedo case.

What about BC2? A multiplayer game like COD4 or BC2 could occupy me for literally years. If I thought just in terms of my present situation (Q9450, 4 gigs of memory) but added a single 6970, maybe one more later, plus Windows 7 64-bit, what would it take for me to play BC2 at full 2560x1600 at a minimum of 30-35fps?

Rich

AfterDawn Addict

4 product reviews

23. December 2010 @ 16:16

Well, you understand the risks, so it's your problem to deal with when you finally kill it off :P

As for the game engine, it's really as-and-when research: I look up what causes a problem when I run into it. Google is a powerful tool!

Nah, you'll notice I always make a point of finding minimum frame rates for games, as an average frame rate of 60 is meaningless if on several occasions it drops down to 9-10, whereas another game that averages 60 may only drop to 45.

Minimum fps, except in a couple of scenarios where autosave causes frame rate drops to 0 or 1, is a guarantee that if it's above 30, the game will be playable, and if it's above 60 the game will be smooth.

By far the best benchmark scenario uses a graph to show the frame rate throughout the course of the test; this way you can assess for yourself whether frame rate figures and drops are actually relevant.

This isn't actually that hard to do; it can be done simply using Excel and Fraps.
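If you'd rather script it than do it by hand in Excel, here's a rough Python sketch that turns a per-frame timestamp log into a per-second fps series (ready to paste into a spreadsheet and graph), then reports average fps, minimum fps and a verdict using the 30/60 rule of thumb above. The CSV layout is an assumption: two columns (frame number, cumulative time in ms) with one header row, which is how a Fraps frametimes log is usually laid out, so check your own file first. Known autosave hitches would still need ignoring by hand.

```python
import csv
import io
from collections import Counter


def read_frametimes(csv_text):
    """Cumulative per-frame timestamps in ms.

    Assumes a two-column CSV (frame number, cumulative time in ms) with
    one header row; check your own log's layout before relying on this.
    """
    rows = csv.reader(io.StringIO(csv_text))
    next(rows)  # skip the header row
    return [float(row[1]) for row in rows if row]


def per_second_fps(timestamps_ms):
    """Count frames that finished in each whole second of the run."""
    start = timestamps_ms[0]
    buckets = Counter(int((t - start) // 1000) for t in timestamps_ms)
    last = max(buckets)
    # seconds with no frames at all (e.g. an autosave hitch) count as 0 fps;
    # the final, partial second is dropped
    return [buckets.get(s, 0) for s in range(last)]


def summarise(fps_series):
    """Average fps, minimum fps and a rough verdict (30 = playable, 60 = smooth)."""
    avg = sum(fps_series) / len(fps_series)
    low = min(fps_series)
    if low >= 60:
        verdict = "smooth"
    elif low >= 30:
        verdict = "playable"
    else:
        verdict = "drops below 30"
    return avg, low, verdict


if __name__ == "__main__":
    # synthetic run: a steady 50fps second, a one-second freeze, another 50fps second
    stamps = [i * 20.0 for i in range(50)] + [2000.0 + i * 20.0 for i in range(51)]
    series = per_second_fps(stamps)
    print(series, summarise(series))
```

The synthetic run shows the point about averages: the series is [50, 0, 50], so the average still reads about 33fps while the minimum exposes the freeze.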

'A couple of years ago' - it may not seem like it, but it's actually only been 9 months (as of boxing day) since Fermi first launched. Essentially, the HD6900 duplicates most of the Fermi-style architecture, lots of power, lots of memory and brute force. Not so much actual processing power at low-end, but immense staying power for high filter levels and high resolutions.

Of course, the new Radeons still can't handle excessive polygon scenarios like HAWX 2, but such games are rare, and will be for many years to come.

I would say at least try Warhead, as with all the background applications closed, and a massive page file, Warhead will play on Gamer level, albeit with long pauses every 2-3 seconds, but it just isn't fun like that.

The game is heavily CPU-bound as well: maxed out, even an overclocked i7 won't go beyond 50fps. That's a pipe-dream frame rate at 2560x1600, but it nonetheless shows that weaker CPUs will hold the frame rate lower still.

Multiplayer games, Crysis Wars excepted, are generally more forgiving than single-player campaigns, as they have to run well for the game to be fair.

Battlefield: Bad Company 2 is the most demanding popular multiplayer game released to date, yet at 2560x1600 with 4xAA and max settings (including the infamous HBAO) even two HD6850s can manage a respectable minimum of 50fps in lighter scenes. Two HD6870s achieve 60, and the 77 achieved by two 6970s allows extra room for either 8xAA or more demanding scenes.

harvrdguy

Senior Member

23. December 2010 @ 20:58

Quote:

'A couple of years ago' - it may not seem like it, but it's actually only been 9 months (as of boxing day) since Fermi first launched. Essentially, the HD6900 duplicates most of the Fermi-style architecture, lots of power, lots of memory and brute force. Not so much actual processing power at low-end, but immense staying power for high filter levels and high resolutions.

Only 9 months! Yeah, it seems forever! Well I sure am glad that ATI got that worked out!

Quote:

Battlefield: Bad Company 2 is the most demanding popular multiplayer game released to date, yet at 2560x1600 with 4xAA and max settings (including the infamous HBAO) even two HD6850s can manage a respectable minimum of 50fps in lighter scenes. Two HD6870s achieve 60, and the 77 achieved by two 6970s allows extra room for either 8xAA or more demanding scenes.

Well, okay then. Maybe I'll soon be joining Jeff and Shaff out there on the battlefield - and on the Vietnam maps too.

So at 2560x1600, what do you figure I'll get with one 6970 at 4xAA? From the 77fps you mentioned with CF, it sounds like (except perhaps in situations with lots of explosions) I might get frame rates in the 30s. Would you agree?

Is a 64-bit OS still mandatory with only one 6970?

By the way, it took me a while, but I finally found saturation and color temperature in CCC. They aren't in the Color tab, where one would expect them to be; under Display properties there is an Avivo color subsection. I tested it: one forum guy said he used saturation at 140 and temperature at 6700. I ended up with saturation at 150, just a bit more, and it added immeasurable quality and charm to a demo of BIA: Earned in Blood, out in the sunroom where the P4 is, on a 20" Dell LCD. My god, the game was so flat before I found the Avivo color setting. This sounds crazy, but I will be forever grateful to Nvidia for turning me on to digital vibrance and enhancing the game-playing experience with deeper, richer colors!!

The one thing I did not find, however, was anything close to the Nvidia wizard for adjusting monitor color one color at a time across the entire screen, adjusting the gamma of each primary color band to match a dithered reproduction of the color. This apparently is what caused Nvidia to juice my digital vibrance to max, producing shockingly gorgeous shades in World at War. If you know whether Catalyst has something similar, one color at a time, please let me know and I'll dig into it again on the P4.

But I do have to say that I feel so much better having apparently found the equivalent of digital vibrance in CCC, and the forums say that the ability to adjust color temperature from 4000 to 10000, in about 400 adjustment steps, can yield some nice extra customizing even beyond digital vibrance.

AfterDawn Addict

4 product reviews

24. December 2010 @ 07:46

Yes. To use all 2GB of video memory on a 32-bit OS, you'd be limited to 2GB of system memory. In reality this means you'll get about 3GB of system memory, not enough to run most recent games, and only 1GB of video memory being used, not enough to max a fair few games out at 2560.

You can't weasel out of buying RAM, or more usefully, upgrading the system, by buying one card; the system needs replacing if you're going for any high-end card.
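The 2GB/3GB split comes from a 32-bit OS only being able to address 4GiB in total, with mapped video memory competing with RAM for that space. A deliberately simplified Python sketch of that budget, assuming the mapped video memory comes straight out of the 4GiB and ignoring other devices (real chipsets and drivers reserve space differently):

```python
GIB = 1024 ** 3
ADDRESS_SPACE_32BIT = 2 ** 32  # every byte a 32-bit OS can address: 4 GiB


def usable_ram_32bit(installed_ram, mapped_vram, other_devices=0):
    """RAM a 32-bit OS can still use once device memory is mapped.

    Simplified model (an assumption): mapped video memory and any other
    device windows come straight out of the 4 GiB address space, and RAM
    gets whatever is left. Real chipsets and drivers differ.
    """
    remaining = ADDRESS_SPACE_32BIT - mapped_vram - other_devices
    return min(installed_ram, remaining)


if __name__ == "__main__":
    for vram in (2 * GIB, 1 * GIB):
        ram = usable_ram_32bit(4 * GIB, vram)
        print(f"{vram // GIB} GiB video memory mapped -> {ram / GIB:.0f} GiB of RAM usable")
```

With 4GB installed, mapping the full 2GB of video memory leaves 2GB of RAM, and mapping only 1GB leaves 3GB, matching the trade-off above. A 64-bit OS removes the 4GiB ceiling altogether.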

For colour adjustment I assume you mean this:

and for vibrance I assume you mean this:

harvrdguy

Senior Member

24. December 2010 @ 20:46

Originally posted by Sam:

Yes. To use all 2GB of video memory on a 32-bit OS, you'd be limited to 2GB of system memory. In reality this means you'll get about 3GB of system memory, not enough to run most recent games, and only 1GB of video memory being used, not enough to max a fair few games out at 2560.

You can't weasel out of buying RAM, or more usefully, upgrading the system, by buying one card; the system needs replacing if you're going for any high-end card.

Hahaha. Rich the weasel! I've been called worse, lol.

Well, ok, to ask if I could get by without going to a 64 bit O/S was foolish - I will promptly put in Windows 7. I don't know what I was thinking.

Now, with Windows 7 64-bit, once I do that, my system will see all 4 gigs of system RAM, plus all 2 gigs of video RAM. I assume I am correct on that, but please let me know if there's something I don't yet understand.

So, now, other than the OS, yes, I'm weaseling out of a full upgrade, by just installing ONE 6970 for now, for a very reasonable $370 expenditure.

One bench I spotted yesterday suggested 42fps in BF:BC2 - it might have been the same bench you were looking at - I think I did see the 77 with 6970 CF.

So with 42fps average, unless I have the misfortune to have Jeff or Shaff blasting me at the particular moment before I get the drop on them, which undoubtedly would reduce my frame rate, I think I could get by on that. Sure, there would be some lagginess in situations with lots of explosions, but I could probably handle it. Meanwhile, Warhead stays up in the closet as unplayable for now, until I pony up and do a real system upgrade to the tune of a couple of thou.

Does that all make sense?

- - - - - - - - - - - -

Well, here's the section I found for saturation and color temperature:

It's not exactly what you found, which I see is under the Video section - for playing videos I guess. I tried the video section at first, thinking that was the correct spot, and the settings did not carry into the BIA EIB demo.

But as shown above, when I went to monitor properties, there I found the avivo color subsection that the forums told me about, and those settings DID carry into the demo. In fact, logging onto the p4 just now to pull the chart over from that computer, my "red hair" icon was unusually bright - so the settings have certainly stuck!

Regarding the other Catalyst screenshot you posted, showing settings by color, yes, I found that also on the P4. BUT - how do you do the setting?

What I mean by that is: what guide do I have to help me adjust the contrast and brightness color by color? I can change the image, and I get an image that appears to have some dithering on it, but I can't really see how to work with that. Do you see what I mean? The tools are there to adjust my contrast and brightness color by color, but I have no graphic to work against, unlike the Nvidia wizard, which puts one color up, fills the entire screen, and lets me adjust the color to match the dithering, and tells me to first unfocus my eyes a bit to make it all work, lol.

Maybe that catalyst page you found would work, if I picked up a color chart and displayed it on my screen - something with some dithering on it I suppose. Would you have any idea where I could get a chart like that - and do you think that would help me?
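A dithered chart like the one the Nvidia wizard shows is easy to generate yourself. The principle: alternating full-on and full-off lines emit half the maximum light, and a solid level v emits (v/255)^gamma of it, so the two look equal when v = 255 × 0.5^(1/gamma). The Python sketch below writes a red, a green and a blue band, each with a line-dithered left half and a solid right half at that level. The 2.2 target gamma and the file name are assumptions; view the file at 100% zoom in something that opens PPM files (GIMP or IrfanView, for example), since any rescaling blurs the lines and ruins the comparison.

```python
def matching_level(gamma: float) -> int:
    """8-bit level that should look as bright as a 50% on/off line dither.

    Alternating full-on/full-off lines emit half the maximum light; a solid
    level v emits (v / 255) ** gamma of it, so they match when
    v = 255 * 0.5 ** (1 / gamma).
    """
    return round(255 * 0.5 ** (1 / gamma))


def gamma_chart_ppm(gamma: float = 2.2, half_width: int = 200, band_height: int = 80) -> bytes:
    """Binary PPM (P6) image with one band each for red, green and blue.

    Left half of each band: alternating full/zero lines of that colour.
    Right half: a solid patch at matching_level(gamma).
    """
    level = matching_level(gamma)
    masks = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]  # red, green, blue bands
    width, height = 2 * half_width, 3 * band_height
    pixels = bytearray()
    for y in range(height):
        mask = masks[y // band_height]
        dither_value = 255 if y % 2 == 0 else 0  # one-pixel horizontal lines
        for x in range(width):
            value = dither_value if x < half_width else level
            pixels += bytes(value * m for m in mask)
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    return header + bytes(pixels)


if __name__ == "__main__":
    # hypothetical output file name
    with open("gamma_chart.ppm", "wb") as f:
        f.write(gamma_chart_ppm(2.2))
```

With your eyes slightly unfocused, adjust each colour's gamma/brightness in Catalyst until the striped half and the solid half of that band blend together; a band where the solid side looks brighter or darker shows that channel is off from the 2.2 target.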

Well - off to a Christmas eve family event - Happy Holidays Sam and Shaff and DXR and Kevin and Jeff and Red and DDP and Binkie and Russ and everybody else!

Rich

AfterDawn Addict

4 product reviews

24. December 2010 @ 21:05

One HD6970 performance-wise is fine. It's still a capable card on its own at 2560x1600, but it won't be maxing out every title out there with 60fps at that res.

I only referred to 'weaseling out' for using one because doing so doesn't solve the problem of needing more memory, and a 64-bit OS. If you only buy one for financial reasons that's fair, but if you only buy one to avoid buying more memory or a new base platform, you're wasting much of the talent of even one card.

It's 38fps for one 6970. The scaling is 100% for minimum fps, thankfully :P
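"100% scaling" means the second card adds a full card's worth of performance, i.e. the pair doubles the single-card figure. A tiny Python sketch of that calculation using the two BC2 minimum-fps figures quoted in this thread (38fps for one HD6970, 77fps for two):

```python
def scaling_percent(single_fps: float, dual_fps: float) -> float:
    """Extra performance from the second card, as a % of one card's result.

    100% means the pair delivers exactly twice the single-card figure.
    """
    return (dual_fps / single_fps - 1) * 100


if __name__ == "__main__":
    # BC2 minimum-fps figures from this thread: one HD6970 vs two in Crossfire
    print(f"{scaling_percent(38, 77):.0f}%")
```

That works out to about 103%, so slightly better than perfect doubling, within the noise of a benchmark run.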

The actual system upgrade need not cost that much. Of course, at $740 the pair of HD6970s is a lot, but an i5 760, a P55A-UD4P and 16GB of RAM need only cost $620, and that's all you need for gaming, save the cooler.

Don't pay much attention to how your Catalyst looks; the Windows XP and Windows 7 Catalyst layouts are completely different.
